How to Configure a Remote Data Store for Prometheus

Introduction

This article is part of a series on setting up an end-to-end monitoring and alerting stack using Prometheus.

The Prometheus monitoring tool can store its metrics either locally or remotely. You can configure a remote data store using the remote_write configuration.

This article describes the various data store options available as well as how to set up a remote store.

Overview of Remote Storage

By default, Prometheus stores data locally wherever it is installed. The data directory can be configured by using the --storage.tsdb.path command line option when starting Prometheus.

In practice, you can attach a separate, faster disk to the machine running Prometheus and point the data directory at it for higher performance.

However, this may not be possible or optimal in all situations. You might want a data store that is better suited to time series data and has more storage capacity for longer data retention. Prometheus usually runs in a standalone VM, a Kubernetes pod, or a Docker container, and it does not have access to such data stores by default.

A remote store can add these capabilities to Prometheus. The remote storage option can be set by using the remote_write key in the Prometheus configuration YAML file.


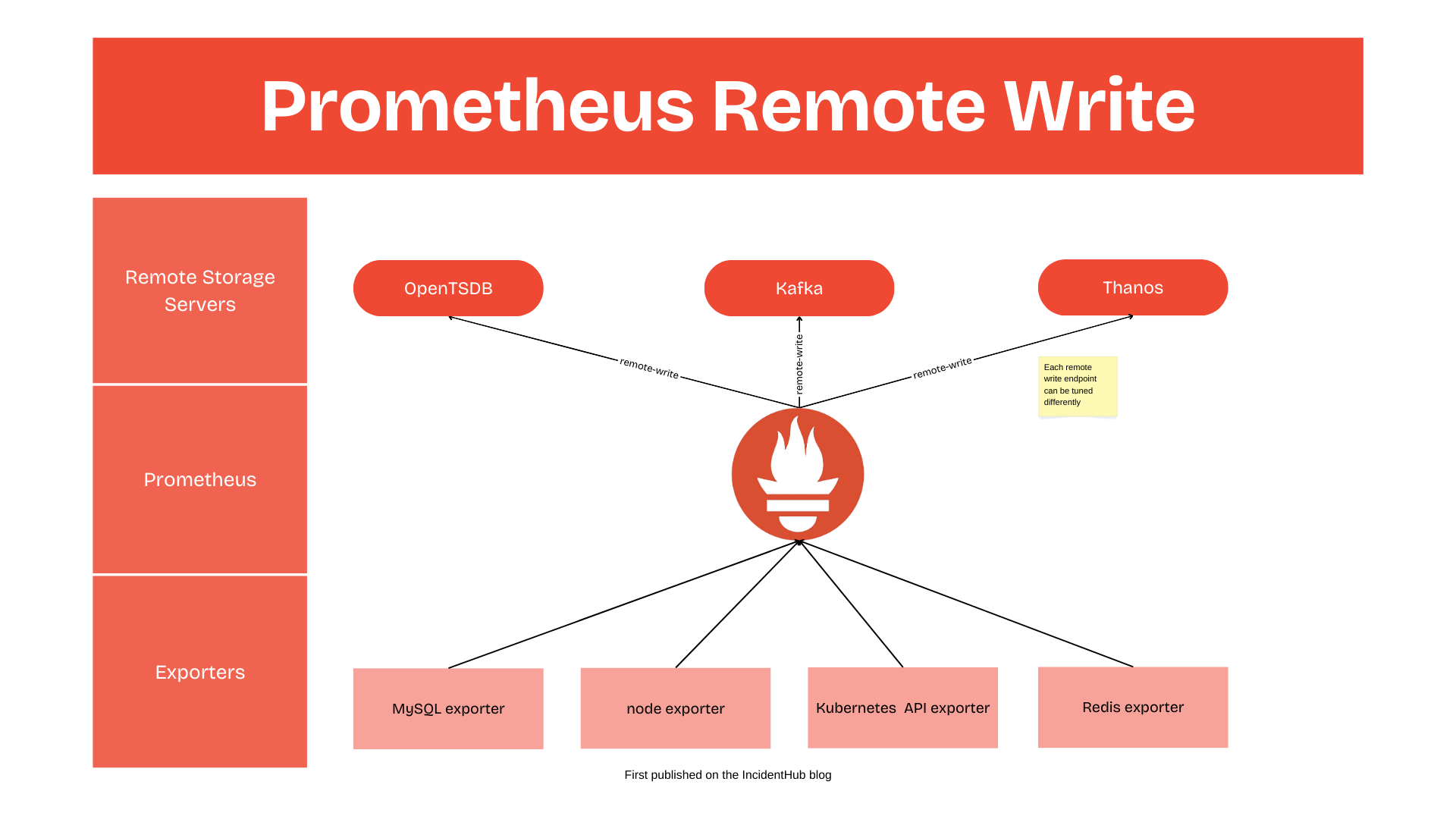

Remote Store Architecture

Remote Store Configuration

Basic Syntax

A very simple configuration for a remote store that accepts unauthenticated connections would look like this:

remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"

You can list multiple remote write destinations under the same remote_write key; each entry in the list is configured independently.
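As a sketch of multiple destinations, the configuration below sends the same metrics to two remote stores. The second URL and the backup-metrics name are hypothetical placeholders. Each entry gets its own queue and settings, and if you set name, it must be unique across entries.

```yaml
# Two remote write destinations under a single remote_write key.
remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"
  # Hypothetical second store; replace with your own endpoint
  - url: "http://192.168.23.5/api/v1/write"
    name: "backup-metrics"   # names must not repeat
```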

Based on your requirements and the features supported by the remote write server, you can configure other options. Let us look at them one by one.

Security and Authentication

To protect your metrics data in transit, whether it travels over your internal network or the internet, you can enable both TLS and authentication. The remote store server must support these options.

# Remote write configuration for Prometheus
remote_write:
  - url: "https://prometheus-data-store.mydb.io/api/v1/write"
    name: "production-metrics"
    # Configure only one authentication method per remote_write entry.
    basic_auth:
      username: "prometheus"
      password: "secret-password"
    # Alternative to basic_auth: send a bearer token instead.
    # authorization:
    #   credentials: "<token>"
    tls_config:
      insecure_skip_verify: false
      ca_file: "/path/to/ca.pem"
      cert_file: "/path/to/cert.pem"
      key_file: "/path/to/key.pem"

This sample configuration does the following:

- Adds basic auth credentials, with a bearer token shown as a commented-out alternative. Prometheus rejects a remote_write entry that configures more than one authentication method, and it does not allow setting the Authorization header through headers, so use basic_auth or authorization instead.

- Adds a tls_config. The ca_file is needed when a private CA has issued the remote store's server certificate; if the certificate comes from a well-known CA, you can omit it. The cert_file and key_file present a client certificate and are only needed if the remote store requires mutual TLS.
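If the remote store's server certificate comes from a private CA but the server does not require client certificates, ca_file alone is enough. A minimal sketch, assuming basic auth with the password kept in a separate file rather than in prometheus.yml; the hostname and file paths are placeholders.

```yaml
# Sketch: private-CA server certificate, no client certificate (no mutual TLS).
remote_write:
  - url: "https://prometheus-data-store.mydb.io/api/v1/write"
    name: "production-metrics"
    basic_auth:
      username: "prometheus"
      # Hypothetical path; the file holds only the password
      password_file: "/etc/prometheus/remote-write-password"
    tls_config:
      # CA that issued the remote store's server certificate
      ca_file: "/path/to/ca.pem"
```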

You can also use a separate authorization section, which sets the Authorization header and gives you more options, such as choosing the credential type or reading the credentials from a file. The examples below are alternatives shown for illustration; use only one of them per remote_write entry.

# Example 1: Default Bearer type with direct credentials
authorization:
  type: Bearer
  credentials: "eyJhbGciOiJIPoI1NiIsInR5cCI6IkpXVCJ9..."

# Example 2: Bearer type with credentials read from a file. credentials_file is mutually exclusive with credentials
authorization:
  type: Bearer
  credentials_file: "/etc/prometheus/token.txt"

# Example 3: Custom type with direct credentials
authorization:
  type: CustomAuth
  credentials: "secret-token-123"
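The authorization snippets above are fragments: they belong inside a remote_write entry, at the same level as url. A minimal sketch of the placement, reusing the token file path from Example 2:

```yaml
remote_write:
  - url: "https://prometheus-data-store.mydb.io/api/v1/write"
    name: "production-metrics"
    # Bearer is the default type, so the type line could be omitted
    authorization:
      type: Bearer
      credentials_file: "/etc/prometheus/token.txt"
```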

Remote Write Protocol Configuration

As of this writing, the remote write specification is changing: Remote Write 2.0 is being introduced alongside the original Remote Write 1.0 protocol.

You probably don't have to worry about this section unless you are optimizing for very specific cases. The protobuf_message setting selects the protobuf message Prometheus uses when sending metrics. The default, prometheus.WriteRequest, is the Remote Write 1.0 message.

The right value depends on what your remote store server supports.

remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"
    protobuf_message: prometheus.WriteRequest
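If your remote store accepts Remote Write 2.0, you can switch to the newer message. A sketch, assuming a Prometheus version that ships the experimental Remote Write 2.0 support and a server that accepts it:

```yaml
remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"
    # Remote Write 2.0 message (experimental); the server must support it
    protobuf_message: io.prometheus.write.v2.Request
```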

Network Configuration

Depending on your network and your remote store server, you can tune some connection settings.

The remote_timeout key sets the timeout for requests to the remote write endpoint. The default value is 30s. You would not need to set this unless you have a noisy network, or a device in the network path between your Prometheus server and the remote store server, such as a proxy or load balancer, enforces a shorter timeout.

If Prometheus reaches your remote store through a proxy server, you can configure the proxy details in the same remote_write entry.

remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    remote_timeout: 45s
    name: "production-metrics"
    # Proxy configuration
    proxy_url: "http://proxy.internal:4200"
    proxy_connect_header:
      "Proxy-Authorization": ["Basic xxxxxxxxxxxxxxxxxxxx"]
      "X-Custom-Proxy-Header": ["app1", "app2"]
    proxy_from_environment: false
    follow_redirects: true
    enable_http2: true
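Instead of hardcoding proxy_url, Prometheus can take the proxy from the standard HTTP_PROXY, HTTPS_PROXY, and NO_PROXY environment variables. A sketch, assuming those variables are set in the environment of the Prometheus process; proxy_url must then be left unset, because the two options cannot be combined.

```yaml
remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"
    # Use HTTP_PROXY / HTTPS_PROXY / NO_PROXY from the environment.
    # Do not set proxy_url together with this option.
    proxy_from_environment: true
```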

Metrics Configuration

You can use the write_relabel_configs key to modify or drop specific metrics before they are written to the remote store. It uses the same relabeling syntax as relabel_configs in a scrape_config section. You might want to do this if:

- You have multiple remote stores and want specific metrics to go only to specific stores, to avoid unnecessary storage costs.

- You have one remote store but want certain metrics to stay only in Prometheus' local storage instead of being written there.

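remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    write_relabel_configs:
      # Drop all metrics whose name starts with test_metric
      - source_labels: [__name__]
        regex: 'test_metric.*'
        action: drop
      # Drop all series from the staging environment
      - source_labels: [environment]
        regex: 'staging'
        action: drop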
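For the first use case, routing specific metrics to specific stores, a keep action forwards only the matching series. A sketch with two stores, where the second URL, both names, and the http_ prefix are hypothetical; relabeling regexes are fully anchored, so 'http_.*' matches only names that start with http_.

```yaml
remote_write:
  # Store 1: receives everything except test metrics
  - url: "http://192.168.23.4/api/v1/write"
    name: "all-metrics"
    write_relabel_configs:
      - source_labels: [__name__]
        regex: 'test_metric.*'
        action: drop
  # Store 2 (hypothetical): receives only metrics named http_*
  - url: "http://192.168.23.5/api/v1/write"
    name: "http-metrics"
    write_relabel_configs:
      - source_labels: [__name__]
        regex: 'http_.*'
        action: keep
```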

Queue Configuration

The queue_config section has settings to fine-tune the queue that is used to write to remote storage. Prometheus creates an internal queue for each remote write destination. As it collects metrics, Prometheus writes them to a write-ahead log (WAL) that it can replay if there's a crash. Each destination's queue reads metrics data from the WAL and sends it to the remote store server. Each queue is split into multiple shards that send data in parallel, and Prometheus adjusts the number of shards between min_shards and max_shards based on throughput.

You only need to tune the queue settings if you have a very high volume of data or the remote store is struggling to keep up with your Prometheus server.

These writeups cover tuning the queue settings for remote_write in detail:

- https://grafana.com/blog/2021/04/12/how-to-troubleshoot-remote-write-issues-in-prometheus/

- https://last9.io/blog/how-to-scale-prometheus-remote-write/
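The queue settings live under queue_config in each remote_write entry. The values below are illustrative only, not recommendations; compare them with the defaults for your Prometheus version and the tuning advice in the articles linked above before changing anything.

```yaml
remote_write:
  - url: "http://192.168.23.4/api/v1/write"
    name: "production-metrics"
    queue_config:
      # Samples buffered per shard before reading from the WAL blocks
      capacity: 10000
      # Bounds for automatic resharding (parallel senders)
      min_shards: 1
      max_shards: 50
      # Maximum number of samples in one request to the remote store
      max_samples_per_send: 2000
      # Send a partially filled batch after waiting this long
      batch_send_deadline: 5s
      # Retry backoff range for failed requests
      min_backoff: 30ms
      max_backoff: 5s
```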

Remote Storage Options

A non-exhaustive list of software that supports the Prometheus remote write protocol includes:

- Thanos

- VictoriaMetrics

- Splunk

- OpenTSDB

- Kafka

- InfluxDB

- Google BigQuery
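Each backend exposes its own write endpoint. As a sketch, a single-node VictoriaMetrics instance and a Thanos Receive component are commonly reached at the paths below. The hostnames are placeholders, and the ports assume each project's default listen address, so check their documentation for your deployment.

```yaml
remote_write:
  # VictoriaMetrics single-node (default HTTP port 8428)
  - url: "http://victoriametrics.internal:8428/api/v1/write"
    name: "victoriametrics"
  # Thanos Receive (default remote write port 19291)
  - url: "http://thanos-receive.internal:19291/api/v1/receive"
    name: "thanos"
```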

Troubleshooting

Prometheus failing to write to the remote storage

This can be caused by a number of issues:

Network connectivity between Prometheus and the remote store

Check if you can reach the remote store using ping or curl.

If there is a proxy in between, it might be dropping packets or might not be running

Check if the proxy is running. Verify that the proxy configuration as well as the Prometheus remote_write proxy settings are correct. Check the proxy server's logs for any errors.

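The proxy might also be rejecting large requests. Check its maximum request body size, or lower max_samples_per_send in queue_config so that each request is smaller.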

Requests are timing out due to network issues

Run a traceroute (or mtr) from your Prometheus server to the remote store to see where along the path packets are being delayed or dropped.

Requests are timing out due to the remote store not being able to keep up

Tune the queue configuration. If the problem starts suddenly, it's important to find the root cause; for example:

- The number of metrics might have increased due to autoscaling events or an increase in cardinality.

- The remote store might have disk issues.

Best Practices

- Back up your data in the remote store.

- Add security and authentication between Prometheus and the remote store server. If your remote store does not support this natively, you can add a proxy like nginx in between and configure it to handle TLS and authentication.

- Monitor your remote store's metrics for signs of trouble.

- If you are in a regulated industry, ensure that your remote store is compliant with your requirements. For example, if it is managed by a cloud vendor, verify that the vendor's security certifications meet your needs.
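You can also watch the remote write path from the Prometheus side by alerting on Prometheus' own remote storage metrics. A sketch of an alerting rules file, assuming a Prometheus version that exposes prometheus_remote_storage_samples_failed_total, prometheus_remote_storage_samples_total, prometheus_remote_storage_highest_timestamp_in_seconds, and prometheus_remote_storage_queue_highest_sent_timestamp_seconds. These names have changed between versions, so check your server's /metrics page. The group name, alert names, and thresholds are hypothetical.

```yaml
groups:
  - name: remote-write   # hypothetical group name
    rules:
      # More than 1% of samples failing to send, sustained for 15 minutes
      - alert: RemoteWriteFailures
        expr: |
          rate(prometheus_remote_storage_samples_failed_total[5m])
            /
          (rate(prometheus_remote_storage_samples_failed_total[5m])
            + rate(prometheus_remote_storage_samples_total[5m]))
          > 0.01
        for: 15m
        labels:
          severity: warning
      # Remote write more than 2 minutes behind the newest ingested sample
      - alert: RemoteWriteBehind
        expr: |
          prometheus_remote_storage_highest_timestamp_in_seconds
            - ignoring(remote_name, url) group_right
          prometheus_remote_storage_queue_highest_sent_timestamp_seconds
          > 120
        for: 15m
        labels:
          severity: warning
```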

Conclusion

The remote store functionality in Prometheus offers a scalable and flexible way of adding a dedicated storage backend for Prometheus metrics. You can use the remote store for increased data retention, durability of data, and offline data analysis.

FAQ

What is remote storage in Prometheus?

An external system to store Prometheus metrics in addition to using local storage.

Why would I need Prometheus remote storage?

For longer data retention, larger storage capacity, increased durability, and the ability to use dedicated time-series storage systems.

How do I configure Prometheus remote storage?

Add a remote_write configuration to your Prometheus configuration YAML. For more details, see the sections above.

What Prometheus remote storage options are available?

There are many options including but not limited to Thanos, VictoriaMetrics, Splunk, OpenTSDB, Kafka, InfluxDB, and Google BigQuery.

How can I secure my Prometheus remote storage connection?

Use TLS encryption, combined with either basic auth or a bearer token.

Can I send different metrics to different Prometheus remote stores?

Yes, you can use write_relabel_configs in individual remote_write entries to filter metrics.

What should I do if Prometheus remote writes are failing?

Some things to check are network connectivity, proxy settings, remote store performance settings, and queue configuration.

What are the best practices for Prometheus remote storage?

Back up your data, implement security between Prometheus and the remote store server, monitor the remote store's key metrics, and ensure compliance with your regulatory requirements.

Can I have multiple Prometheus remote storage destinations?

Yes, you can add multiple entries to the remote_write list.


This article was originally published on the IncidentHub blog.

All product names, company names, logos and trademarks are the property of their respective owners.